From 60-Page Report to Interactive Insight: How Certainty-Lab Re-imagined The Alan Turing Institute’s Landmark Study on Generative AI and Children

Why This Matters

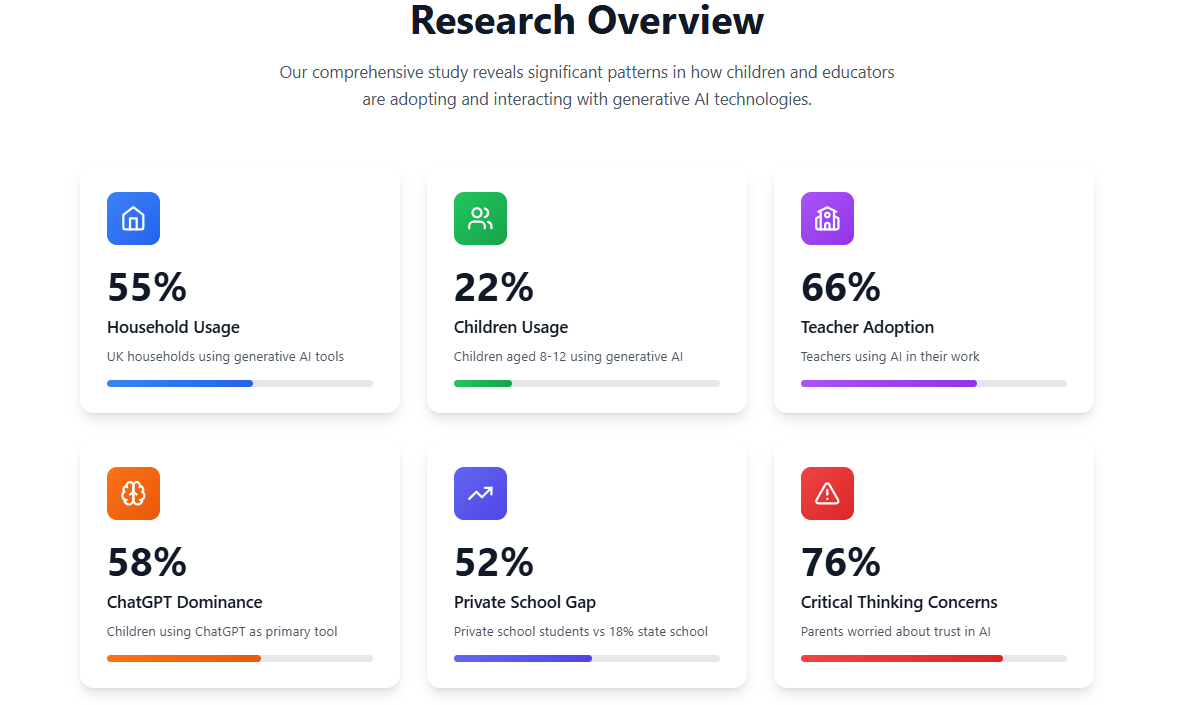

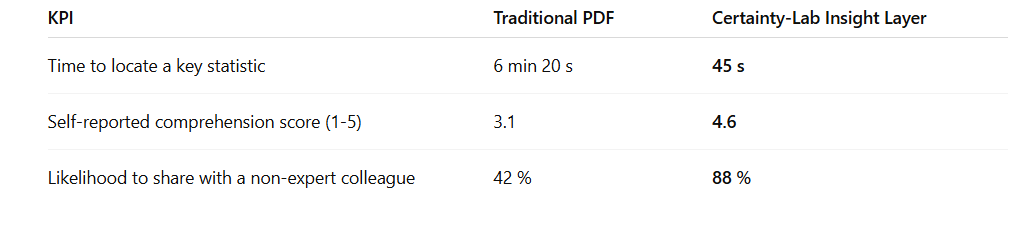

Generative AI is no longer a futuristic novelty for adults; it is already shaping the way children learn, create, and socialise. A recent series of work-package reports from The Alan Turing Institute (ATI), “Understanding the Impacts of Generative AI Use on Children,” offers one of the most comprehensive examinations of this phenomenon to date. Yet the series runs to more than 60 pages across multiple PDFs, and even seasoned tech professionals found it dense. For non-specialist stakeholders such as parents, policy-makers, and educators, the barrier was even higher.

At Certainty-Lab (LOC) we believe vital research should be actionable, not merely accessible. Our mandate: transform ATI’s extensive evidence base into a living, conversational experience that any colleague—or client—can explore in minutes.